Ethics programmed into robots may not solve the problems we face at work, in healthcare, or in wartime.

The relations between rhetoric and ethics are disturbing: the ease with which language can be twisted is worrisome, and the fact that our minds accept these perverse games so docilely is no less cause for concern. - Octavio Paz

Humans are on the fast track to outsmarting ourselves, and we are making it all possible with AI and robots. As Andrew Yang has said, “Automation is no longer just a problem for those working in manufacturing. Robots replaced physical labor; mental labor is going to be replaced by AI and software.”

But above and beyond the abilities we give, or will give, to automated “beings” is the question of ethics, and whether our lack of insight into it will let us foresee what we have wrought. As with everything, we need to know when to stop, but our feverish wish to create ever more ingenious machines is now a cause for concern. Do we see it, or do we deny it?

Christine Fox, at a TED conference a few years ago, admitted that we have “a toxic brew” where advanced technology “is available to anyone who wants to buy them with few if any constraints over their development and accessibility.”

Technology should not be hogtied in its advances, and some of it is plainly advantageous, such as simple apps that link people in need with caregivers. Other forms, however, lie outside our ability to govern. Cyber warfare is one, and it now occupies entire divisions of personnel tasked with defending and monitoring internet networks.

Armies have left the trenches and taken to the skies, and now they need not leave their home bases to conduct strategic attacks, kill soldiers, destroy cities or blow up anything they wish from thousands of miles away. But what about collateral damage, e.g., civilians?

Today, many technology companies are setting up something the defense department calls “Red Teaming.” A red team’s mission is to approach new technology creatively and adversarially to determine how it could be turned from its intended use to something sinister or problematic. The team must also detect where the loopholes are and weigh the ethics involved. But ethics can be relative, and it is here that the major danger lies.

In other words, it’s the white hats against the black hats to work out the bugs and the vulnerabilities. Which corporations are doing this to address the ethical dilemmas of automation and AI?

Have we gone beyond the skills of Kevin Mitnick? One of Mitnick’s skills that he used very successfully was social engineering. People are vulnerable, and you can distort their sense of ethics if you are clever.

When I taught college students about hypnosis and how it might be used malevolently, I asked if they could be persuaded to kill someone. Resoundingly, they said they couldn’t. But, I offered, if I convinced them that a child was in danger of being killed by someone else, would they defend the child and kill to protect it? A dilemma ensued.

What is the corporate equivalent of war games when it comes to technology’s ethical parameters? And does such an exercise deal with ethics, or solely with protecting a network from attack? Are ethics malleable according to our needs?

Where Are Our Vulnerabilities and Who Evaluates Them?

Decades ago, the science fiction writer Isaac Asimov provided a guide for the future of ethical robotics. He proposed three laws as a foundation for robotic ethics and later added a fourth that takes precedence over them, the Zeroth Law. This law states that a robot may not harm humanity or, by inaction, allow humanity to come to harm.

Asimov’s original Three Laws of Robotics are:

First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey the orders given by human beings except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The three laws would seem to protect us from unethical robots, but do they? One physicist and AI researcher (Max Tegmark) is already predicting that robots with an ability to exceed our intelligence could form a totalitarian state.

As a result of technological advances, we can envision “slaughterbots” instead of the relatively benign chatbot. The killer bot, using facial recognition, will fly to someone’s home or office, kill the person and then self-destruct. This dire illustration was provided by Tegmark.

Is It Ethical for AI Not to Identify Itself?

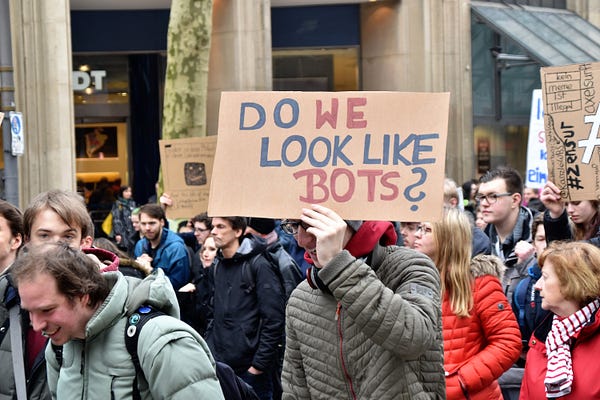

One question becoming more pressing is whether AI systems mimic humans so well that we believe we are interacting with a fellow human, not a machine. Have you been fooled yet?

The closer technology brings AI to emulating humans, the more firmly its place in our society is solidified. In fact, in a large international survey sponsored by a technology company, over 60% of the respondents said they’d rather talk to a chatbot than a therapist for mental health difficulties. Their reasons included being anxious and wanting to avoid being judged.

The topic is of some importance to Thomas Metzinger, who wants AI to clearly identify itself as non-human when a social interaction arises. In fact, he is calling for a ban on these machines if they don’t self-identify when dealing with humans.

An example of AI that could run afoul of such a ban is Google Duplex, which uses a human-sounding voice. By contrast, Replika is a chatbot that users know is AI: it scans user texts and uses a neural network to learn to “converse,” or rather text, with you. Its makers tag it as “the AI companion who cares.” Should they be using “who”?

The AI friend allows users to talk freely about their lives, their problems, and so on. They think of it as a non-judgmental friend. The idea arose from the developer’s tragic loss of a friend; she realized she wasn’t alone in missing him. He had friends who still wanted a connection to him, even an AI one. She and her team sat down and began coding until they had Replika.

Are Chatbots That Emulate Therapists Ethical?

The creator of Replika believes it isn’t a question of ethics at all. She has indicated that they receive thousands of emails from users who say it helped them through depression and trying times. Isn’t that ethical, and doesn’t it follow one of Asimov’s Three Laws?

Philosophers ask whether relying on a chatbot is any more questionable than relying on a higher power whose existence no one can confirm. Some pray or communicate with angels or dead loved ones. If it helps, how does ethics enter into it?

But Tegmark isn’t interested in these types of things; he is concerned about danger. The danger he perceives is contained in one question: What kind of world do we want it to be?

And how will we balance our wish for advancement against our need for security from the superintelligent things we create, which do simple tasks now but can improve themselves in ways we never considered?

Tegmark has stated that “If we bumble into this now, it’s probably going to be the biggest mistake in human history.”

Rather than limit ourselves to thinking about chatbots and therapists, what about AI interfering not only with social media (it can write without human input) but also with elections? Convincing citizens to change their thinking and voting patterns could change a country’s governance and bring authoritarianism into being.

The Surge to Merge

The more technology companies gobble up social media companies, the more concerned we should become. Hasn’t Facebook begun this process? What about Google? Should we be learning from mistakes, or anticipating them so that we can avoid them?

Hundreds of millions of people can be influenced by AI, far outpacing any attempt at rational debate by mere humans, who may reach a paltry tens of millions if they have the funding.

In the future of AI, will we find paradise or an apocalypse? How we handle our technology now will provide the roadmap to either. What happens when we begin to replace human body parts or brain sections with AI-created products? How much less human and more robotic will we become, and will the process meld us into something evolution never could?

The future, according to Brian Patrick Green, poses two questions:

1) How can we use technology to make it easier to do good?

2) How can we use technology to make it harder to do evil?

For those working in AI and ML who seek to release powerful new technologies on an unprepared world, these questions are inadequately considered. Ideally technologists would govern themselves with the highest ethical standards, and therefore not need any external governance; indeed, helping technologists in this way is what I try to do.

What about robots in warfare? Asimov’s laws could never apply here because warfare aims to eliminate the enemy, and if the enemy isn’t a machine but a human, what is the robot’s prime imperative? How are ethical considerations programmed into the robot?

The time is now, or should have been years ago, to design virtue and ethics into our AI. The topic is neither frivolous nor a waste of time, because human life, freedom, and the future of this planet may depend on it.